When Docker lost our mDNS packet in the Operating System harbor

Charles-Antoine Couret

21/02/2024

Context

My current customer is CMC, a company that designs and builds controllers for industrial compressors. These large machines compress air for a wide range of industrial uses. Many physical parameters must be monitored, such as temperature, pressure, humidity, dew point and power, to produce compressed air efficiently and to improve the reliability of these machines.

The project is to build an ecosystem that collects this data from different kinds of sensors, sends it to the cloud, and finally produces reports, checks that devices are working properly and predicts failures.

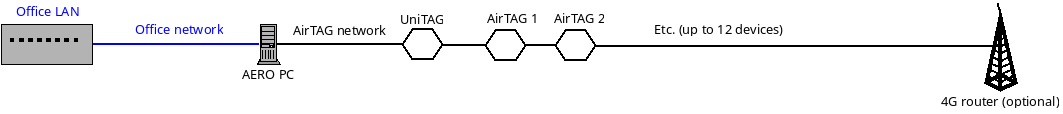

The components of this ecosystem that are relevant to this story are:

- A small x86 PC that displays some data locally on a touch screen. The data comes from other local machines over Ethernet. This product is called AERO PC.

- Inside the AERO PC there is a board, based on an i.MX6ULL, that fetches data from other devices over the network and does some processing before sending it to the AERO PC or the cloud. This device is called UniTAG. It is separate from the AERO PC because it can also be used as a standalone device.

- Each compressor has its own monitor, also based on an i.MX6ULL, that collects data from sensors or over Modbus. Its data is sent to the cloud and to the local device manager over Ethernet. These devices are called AirTAG.

The point of the PC is to work without a cloud connection, for local monitoring and for sending some commands. Working offline is sometimes required by the customer for security or privacy reasons, and sometimes simply necessary because connectivity in these factories can be really bad.

Technical design

The AERO PC can be connected simultaneously to two different networks, called office and airtag, which are not bridged. The office network is the factory network; it allows remote control (over VNC or HTTP) and maintenance such as performing an update. On the airtag network, all devices of the ecosystem are connected together and, optionally, to a 4G router so that they can send data to the cloud. The airtag network is internal to the AERO devices: no other devices are connected to it.

With this design, security issues on our devices can’t impact the network of the factory, and employees in the factory can’t use the 4G connection (accidentally or intentionally).

Communication between all devices (AERO PC ↔ UniTAG or UniTAG ↔ AirTAG) uses the gRPC protocol. So that devices can talk to each other without hardcoding IP addresses, each one uses a hostname based on its serial number. We use Avahi both for AutoIP (configuring a link-local address when no DHCP server is present, which sometimes happens) and for mDNS name resolution. This makes every device reachable by its .local name, which is very convenient.
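From an application's point of view, name-based addressing means building the name and handing it to the operating system's resolver. A minimal sketch, assuming a hypothetical `airtag-<serial>.local` naming pattern (the real pattern is not given here) and a host where Avahi's nss-mdns module is enabled in /etc/nsswitch.conf so that glibc's `getaddrinfo()` answers `.local` names over mDNS:

```python
import socket
from typing import List


def device_hostname(prefix: str, serial: str) -> str:
    """Build a '<prefix>-<serial>.local' name; the exact pattern is hypothetical."""
    # mDNS names are case-insensitive, so normalise to lower case.
    return f"{prefix}-{serial.strip().lower()}.local"


def resolve_ipv4(hostname: str) -> List[str]:
    """Resolve a name with the system resolver (glibc NSS), IPv4 only.

    With nss-mdns configured, '.local' names are resolved over mDNS;
    numeric addresses are returned without any network lookup.
    """
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET,
                               type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})
```

For example, `resolve_ipv4(device_hostname("airtag", "A1B2C3"))` returns the device's addresses, or raises `socket.gaierror` if nothing answers for that name.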

The AERO PC runs a copy of the cloud stack locally, with a few adaptations for this purpose. The main application is based on Docker and starts nginx as the web server, PostgreSQL as the database, the gRPC application that fetches data from the UniTAG, and a homemade backend application. On top of this runs a Qt/QML application which simply draws a WebEngineView to display the web application served by nginx, plus some additional information.

The AERO PC runs a custom Yocto-built firmware that contains only the components relevant to our use case.

Issue

One important feature of this system is the ability to display AirTAG dashboards (via HTTP) on the AERO PC by clicking on their names in the interface. This is needed to get more information about a specific compressor than the global overview filled by the UniTAG provides.

The feature works: the web application has the list of devices and redirects the connection to fetch their dashboards. With some additional rules, the nginx server even acts as a reverse proxy for users who display the interface on their computers from the office network, so they can display these dashboards without being on the same network.

After some months, technicians and customers reported an issue with this feature: the error “502 Bad Gateway” was displayed when they used it. Sometimes it happened all the time; sometimes a reboot fixed the problem. It seemed to happen more often when the AirTAGs were not connected to the 4G router (and therefore used an AutoIP configuration), or when they were on a network with a specific domain such as “.customer.local”.

Root cause

After some investigation, we managed to reproduce the behavior reliably by setting the network domain to a non-default value. The root cause was nginx, which could not resolve the names of the AirTAGs: it was unable to find their IP addresses from the ‘.local’ names, even when the devices were on the network and reachable.

At the same time, the gRPC application had no problem resolving these names when we tried the same requests. So the AERO PC itself was clearly able to resolve them.

Our first idea was to give the nginx container access to the Avahi socket of the host operating system, as was already done for the gRPC application, so that it could resolve these names. (Docker blocks mDNS packets by default, so running Avahi inside the container doesn’t work.) Unfortunately, the issue remained. The second idea was to move the container from Docker’s bridged network to ‘host mode’ to give it full access to the network. Despite this change, no improvement was observed…

After reading the nginx documentation and source code, we discovered that nginx has its own resolver, which relies on DNS only and not on mDNS as provided by the operating system’s glibc resolver. That explains why our previous attempts changed nothing. But it raises another question: how could this feature ever have worked at all?
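To make the difference concrete: a unicast DNS query and an mDNS query share the same wire format (RFC 1035), but nginx's resolver sends it to the server named in its `resolver` directive on port 53, while an mDNS client multicasts it to 224.0.0.251 on port 5353 (RFC 6762), where devices answer for their own `.local` names. The sketch below only builds a single-question A query; the hostname in the usage line is hypothetical.

```python
import struct

UNICAST_DNS_PORT = 53                         # where nginx's `resolver` sends queries
MDNS_GROUP, MDNS_PORT = "224.0.0.251", 5353   # where mDNS queries go (RFC 6762)

QTYPE_A = 1    # IPv4 address record
QCLASS_IN = 1  # Internet class


def encode_name(hostname: str) -> bytes:
    """Encode 'airtag-a1.local' as length-prefixed labels ending with a zero byte."""
    out = bytearray()
    for label in hostname.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) <= 63:
            raise ValueError(f"invalid label: {label!r}")
        out.append(len(raw))
        out += raw
    out.append(0)
    return bytes(out)


def build_query(hostname: str, query_id: int = 0, recursion_desired: bool = True) -> bytes:
    """Build an A query. RFC 6762 says multicast queries should use ID 0 and RD=0."""
    flags = 0x0100 if recursion_desired else 0x0000
    # Header: ID, flags, QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0, network byte order
    header = struct.pack("!HHHHHH", query_id, flags, 1, 0, 0, 0)
    question = encode_name(hostname) + struct.pack("!HH", QTYPE_A, QCLASS_IN)
    return header + question
```

The same bytes can go either way, e.g. `sock.sendto(build_query(name, 0x1234), (gateway_ip, UNICAST_DNS_PORT))` for DNS or `sock.sendto(build_query(name, recursion_desired=False), (MDNS_GROUP, MDNS_PORT))` for mDNS; only the second one reaches the AirTAGs directly.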

In fact, local routers or gateways often run their own DNS server, which acts as a cache for global names but also serves the names of local devices. In that case, a device A.local looking up device B.local can send a DNS request to the local gateway, which returns the answer. This resolution of local names is transparent for the devices on the network, and the feature is often used to map a service name to a local machine without configuring each device.

This explains why AirTAGs with AutoIP settings (and thus no configured DNS server) have this issue.

The same explanation applies to the domain setting. For each device on the network, the router adds an entry “<hostname>.<domain>” with its IP address to its lookup table. However, our devices only look for “.local” names, which works only if the domain is not configured or is set to “local”.

nginx relies on information that is not always available. How can we solve this?
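A toy model of this behaviour, assuming a gateway that registers each DHCP client as `<hostname>.<domain>` (hostnames and addresses are made up): the `.local` lookup only succeeds when the domain is `local`, and AirTAGs on AutoIP never obtained a DHCP lease, so they are not in the table at all.

```python
from typing import Dict, Optional


def router_dns_table(leases: Dict[str, str], domain: str) -> Dict[str, str]:
    """Register every DHCP client as '<hostname>.<domain>' -> IP, as some gateways do."""
    return {f"{host}.{domain}".lower(): ip for host, ip in leases.items()}


def lookup(table: Dict[str, str], fqdn: str) -> Optional[str]:
    """Exact-name lookup; DNS names are case-insensitive, hence lower()."""
    return table.get(fqdn.lower().rstrip("."))
```

With `leases = {"airtag-a1": "192.168.1.20"}`, asking for `airtag-a1.local` works with domain `local` but returns `None` with domain `customer.local`, where only `airtag-a1.customer.local` exists.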

systemd to resolve the names… and this issue

The solution requires merging mDNS and DNS data and making the result reachable over DNS.

Docker has its own local DNS server, but it only provides aliases for local containers, so that containers can reach each other without configuring IP addresses. It is too limited for our purpose, and Docker doesn’t use mDNS at all.

dnsmasq is another well-known free software project that can act as a local DNS server. But although some work was started to add mDNS support to it, nothing was merged and the existing patches are quite old.

Finally, it turns out that an integrated solution does exist: systemd-resolved. This component acts as a name resolution manager that groups DNS, mDNS and LLMNR (a Windows protocol similar to mDNS) together, and acts as a DNS server for localhost (rather than as a resolver library like glibc), forwarding requests to the network when it doesn’t have the answer locally.

The downside is that systemd-resolved requires other changes in the system to work well. It needs systemd as the init manager, which means that the way all services are started at boot on the AERO PC is different and had to be adapted.

Even then, integrating everything took some time. Docker detects when systemd-resolved is used as the local DNS server (IP address 127.0.0.53 in the /etc/resolv.conf file) and bypasses it with its own DNS server. The workaround is to make systemd-resolved listen for both local requests and Docker requests, by setting DNSStubListenerExtra=172.17.0.1 (the host address on Docker’s virtual bridge network). Docker then can’t bypass it and everything works. However, this option is only available since systemd-resolved 247.6 and our Yocto layer was using version 241, so a backport was necessary until the Yocto layer was upgraded (which has since been done).

Avahi can now be removed; otherwise two services would be managing mDNS at the same time.
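To illustrate why the stub address is useless to containers, here is a simplified model (not Docker's actual code) of deriving a container's nameservers from the host's resolv.conf: loopback servers such as 127.0.0.53 only exist in the host's network namespace, so they are dropped. As far as we understand, Docker goes one step further when it sees the systemd-resolved stub and reads /run/systemd/resolve/resolv.conf instead, which lists the upstream servers directly, so containers never talk to systemd-resolved and never see mDNS names.

```python
import ipaddress
from typing import List


def nameservers(resolv_conf: str) -> List[str]:
    """Return the 'nameserver' entries of a resolv.conf text, in order."""
    servers = []
    for line in resolv_conf.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


def container_nameservers(host_resolv_conf: str) -> List[str]:
    """Simplified model: drop loopback servers, unreachable from the container's namespace."""
    return [s for s in nameservers(host_resolv_conf)
            if not ipaddress.ip_address(s).is_loopback]
```

A stub-only host file yields no usable server, whereas 172.17.0.1 survives because it is a real interface address that containers on the default bridge can reach.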
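With Avahi gone, systemd-resolved has to handle mDNS itself. A sketch that renders a drop-in enabling it together with the extra stub listener; `MulticastDNS=` and `DNSStubListenerExtra=` are standard resolved.conf options, while the file name (for example /etc/systemd/resolved.conf.d/aero.conf) is hypothetical. mDNS must also be enabled per link, which is what NetworkManager's connection.mdns setting is for.

```python
import configparser
import io


def resolved_dropin(stub_extra: str = "172.17.0.1") -> str:
    """Render a resolved.conf drop-in enabling mDNS and an extra DNS stub listener."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.optionxform = str  # keep systemd's CamelCase option names unchanged
    cfg["Resolve"] = {
        "MulticastDNS": "yes",               # full mDNS resolver + responder (replaces Avahi)
        "DNSStubListenerExtra": stub_extra,  # also answer DNS on Docker's bridge address
    }
    out = io.StringIO()
    cfg.write(out, space_around_delimiters=False)
    return out.getvalue()
```

After writing the text into the drop-in directory, `systemctl restart systemd-resolved` applies it.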

Finally, from inside the nginx container it was possible to ping a device on the network, which meant the design worked. Unfortunately, nginx itself still failed to perform those requests. The solution was to disable IPv6 entirely in nginx and Docker to avoid problems in name resolution.

/etc/docker/daemon.json looks like:

{
    "dns": ["172.17.0.1"],
    "ipv6": false
}
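Generating this file from one place helps keep the address in sync with the DNSStubListenerExtra setting. The nginx side is not shown in the article; one way to stop nginx from asking for IPv6 (AAAA) records, assumed here rather than taken from the original setup, is the `ipv6=off` parameter of its `resolver` directive.

```python
import json


def docker_daemon_config(dns_server: str = "172.17.0.1") -> str:
    """Render /etc/docker/daemon.json pointing containers at systemd-resolved's extra stub."""
    config = {
        "dns": [dns_server],  # containers query systemd-resolved via the bridge address
        "ipv6": False,        # no IPv6 on Docker's networks
    }
    return json.dumps(config, indent=4) + "\n"


def nginx_resolver_line(dns_server: str = "172.17.0.1") -> str:
    """Hypothetical nginx directive: same server, IPv6 lookups disabled."""
    return f"resolver {dns_server} ipv6=off;"
```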

…a new issue appeared! NetworkManager GOOOO!

AERO PCs in the field can have several network settings:

- By default: look for a DHCP server on the office and airtag networks; if none is available, automatically fall back to link-local addressing.

- Static IP addresses on the office and/or airtag networks, with several possible combinations.

With the previous changes, NetworkManager replaced ifupdown to configure the network, because NetworkManager can pass DNS settings to systemd-resolved based on the network configuration or on information from the DHCP server. First, mDNS must be enabled for the connections it manages with the connection.mdns=2 property.

NetworkManager has a plugin to handle ifupdown configuration files, which seemed ideal for us. Unfortunately, its compatibility is quite limited. On the AERO PC, the airtag network is configured as a bridge over the airtag0 and airtag1 physical interfaces, because some units have two physical interfaces (one over PCIe, another over USB). The plugin can only link the physical interfaces together; it cannot read the network configuration of the bridge itself.

So we wrote a script that converts the old configuration file to the new format, so that updates don’t break existing configurations in the field.

The remaining issue was that NetworkManager cannot configure an AutoIP address when the DHCP server doesn’t answer, and it doesn’t call its scripts or the DHCP client scripts when this happens. So the first idea was to add a service that monitors the physical status of airtag0 and airtag1 and calls avahi-autoipd to configure an AutoIP address in that case. Because NetworkManager and avahi-autoipd are no longer well integrated, the AutoIP address could be kept even after the DHCP server had sent valid information in the meantime. To solve this, we moved to two profiles: a default one with DHCP (or static IP address) settings and, if it cannot configure the network within 5 minutes, a fallback to a second profile that uses AutoIP.

In the meantime, the issue was fixed upstream in NetworkManager with a new option to fall back to AutoIP. Thus, the fallback configuration can be removed after upgrading to NetworkManager 1.39.6 (the AERO PC used version 1.16).
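The conversion script itself isn't published. Here is a minimal sketch of the idea under simplifying assumptions: it only understands `iface` stanzas with `address`, `netmask`, `gateway` and `bridge_ports`, ignores IPv6 stanzas and hooks, and emits one NetworkManager keyfile for the interface plus one port keyfile per bridge member (using the keyfile `master` and `slave-type` keys).

```python
import ipaddress
from typing import Dict


def parse_interfaces(text: str) -> Dict[str, Dict[str, str]]:
    """Collect the options of each 'iface' stanza of an ifupdown interfaces file."""
    stanzas: Dict[str, Dict[str, str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        words = line.split()
        if words[0] == "iface" and len(words) >= 4:
            # iface <name> <family> <method>, e.g. "iface airtag inet static"
            current = stanzas.setdefault(words[1], {})
            current["method"] = words[3]
        elif words[0] in ("auto", "allow-hotplug", "mapping", "source", "source-directory"):
            current = None  # a new top-level statement ends the stanza
        elif current is not None:
            current[words[0]] = " ".join(words[1:])
    return stanzas


def to_keyfiles(name: str, opts: Dict[str, str]) -> Dict[str, str]:
    """Return {filename: content}: the interface profile plus one profile per bridge port."""
    kind = "bridge" if "bridge_ports" in opts else "ethernet"
    main = ["[connection]", f"id={name}", f"type={kind}", f"interface-name={name}",
            "", "[ipv4]"]
    if opts.get("method") == "static":
        address = opts["address"]
        if "/" not in address:
            # ifupdown often uses a dotted netmask; keyfiles want a prefix length
            prefix = ipaddress.IPv4Network(f"0.0.0.0/{opts['netmask']}").prefixlen
            address = f"{address}/{prefix}"
        if "gateway" in opts:
            address += f",{opts['gateway']}"
        main += ["method=manual", f"address1={address}"]
    else:
        main.append("method=auto")  # 'dhcp' (and anything else): use DHCP
    main += ["", "[ipv6]", "method=ignore", ""]
    files = {f"{name}.nmconnection": "\n".join(main)}
    for port in opts.get("bridge_ports", "").split():
        files[f"{port}.nmconnection"] = "\n".join([
            "[connection]", f"id={port}", "type=ethernet", f"interface-name={port}",
            f"master={name}", "slave-type=bridge", "",
        ])
    return files
```

A real migration would probably also set a `uuid`, and NetworkManager expects keyfiles to be owned by root with restrictive permissions (typically 0600).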
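A toy model of the two-profile fallback (not NetworkManager's actual algorithm): profiles are tried by decreasing autoconnect-priority, a DHCP profile only wins if a server answers within the timeout, and otherwise the next profile, here link-local, is activated. The 300-second timeout comes from the text; how it is enforced in NetworkManager (possibly through the `ipv4.dhcp-timeout` property) is an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Profile:
    name: str
    method: str    # "auto" (DHCP), "manual" (static) or "link-local"
    priority: int  # autoconnect-priority: higher values are tried first


def active_profile(profiles: List[Profile], dhcp_answer_after: Optional[float],
                   timeout: float = 300.0) -> Profile:
    """Return the profile that ends up active on the interface.

    dhcp_answer_after: seconds until a DHCP server answers, or None if none ever does.
    """
    for profile in sorted(profiles, key=lambda p: p.priority, reverse=True):
        if profile.method == "auto":
            if dhcp_answer_after is not None and dhcp_answer_after <= timeout:
                return profile
            continue  # DHCP timed out: fall back to the next profile
        return profile  # static and link-local addressing need no server in this model
    raise RuntimeError("no profile could be activated")
```

The order of the list doesn't matter, only the priorities: with `office` at 50 and `office-linklocal` at 0, a quick DHCP answer keeps `office`, while no answer (or one after more than five minutes) ends on `office-linklocal`.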

The NetworkManager.conf file looks like:

[main]
dhcp=dhclient
dns=systemd-resolved
plugins=keyfile
no-auto-default=office,airtag,airtag0,airtag1
ignore-carrier=false

[connection]
connection.mdns=2
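The `connection.mdns=2` line is easy to misread. As documented by NetworkManager, the property takes -1 (default), 0 (no), 1 (resolve only) or 2 (yes: resolve and register the hostname). A small sketch that reads such a file and decodes the value; the helper name is ours.

```python
import configparser
from typing import Dict

# Documented values of NetworkManager's connection.mdns property
MDNS_MODES = {
    -1: "default",  # fall back to the global default
    0: "no",        # mDNS disabled on the link
    1: "resolve",   # resolve .local names, don't announce our hostname
    2: "yes",       # resolve names and register our hostname
}


def describe_nm_conf(conf_text: str) -> Dict[str, str]:
    """Extract the DNS backend and the decoded mDNS mode from NetworkManager.conf."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.optionxform = str  # keep option names as written
    cfg.read_string(conf_text)
    return {
        "dns": cfg.get("main", "dns", fallback="default"),
        "mdns": MDNS_MODES[cfg.getint("connection", "connection.mdns", fallback=-1)],
    }
```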

The office profile file, office.nmconnection:

[connection]
id=office
uuid=ea023738-1232-3c63-ba13-1d526700b9bc
interface-name=office
type=ethernet
autoconnect-priority=50
permissions=

[ipv4]
dns-search=
method=auto

[ipv6]
addr-gen-mode=stable-privacy
dns-search=
method=ignore

And the AutoIP office profile, office-linklocal:

[connection]
id=office-linklocal
uuid=ea023738-1232-3c63-ba13-1d526700b9bd
interface-name=office
type=ethernet
autoconnect-priority=0
permissions=

[ipv4]
dns-search=
method=link-local

[ipv6]
addr-gen-mode=stable-privacy
dns-search=
method=ignore
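The two profiles differ only in id, uuid, autoconnect-priority and ipv4.method, so the fallback can be derived from the main profile. A minimal sketch with Python's configparser (a helper of our own, not a NetworkManager tool); it generates a random UUID unless one is passed in.

```python
import configparser
import io
import uuid
from typing import Optional


def linklocal_variant(base_keyfile: str, new_uuid: Optional[str] = None) -> str:
    """Copy a keyfile profile into an '<id>-linklocal' fallback using IPv4 link-local."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg.optionxform = str  # keep keys exactly as written
    cfg.read_string(base_keyfile)
    conn = cfg["connection"]
    conn["id"] = f"{conn['id']}-linklocal"
    conn["uuid"] = new_uuid or str(uuid.uuid4())  # every profile needs its own UUID
    conn["autoconnect-priority"] = "0"            # lower than the DHCP/static profile
    cfg["ipv4"]["method"] = "link-local"          # 169.254.0.0/16 addressing
    out = io.StringIO()
    cfg.write(out, space_around_delimiters=False)
    return out.getvalue()
```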

The autoconnect-priority property is essential here: NetworkManager tries the office profile first before falling back to the office-linklocal profile. But there was one more issue to solve: NetworkManager cannot deal with carrier changes on bridge interfaces. Our solution is to monitor the carrier of the airtag0 and airtag1 interfaces separately with ifplugd, which calls the following script whenever carrier activity is detected:

#!/bin/sh
# Called by ifplugd as: <script> <interface> <up|down>
IFACE="$1"
STATUS="$2"

# airtag0 and airtag1 are ports of the airtag bridge
if [ "${IFACE}" = "airtag0" ] || [ "${IFACE}" = "airtag1" ]; then
    IFACE="airtag"
fi

# Disconnect link-local if the cable is removed, to retry DHCP for the next minutes
# Otherwise the bridge stays stuck on link-local until the next reboot, which is painful
if [ "${IFACE}" = "airtag" ]; then
    if [ "${STATUS}" = "up" ]; then
        nmcli device connect "${IFACE}"
        nmcli connection up id "${IFACE}"
    else
        nmcli connection down id "${IFACE}-linklocal"
        nmcli connection up id "${IFACE}"
    fi

    # Allow the bridge to support mDNS (enable its multicast querier)
    echo 1 > "/sys/class/net/${IFACE}/bridge/multicast_querier"
fi

And that’s it: the solution works fine in all cases. It took a month of digging into source code to find out what went wrong, fiddling with configuration files, and integrating new tools. But the end result is a much more stable and understandable system. And the issue is fixed! Champagne!
